This Tool Can Track Environmental Cost of Your ML Model

Energy consumption is a major factor to plan for when implementing a long-term project or service that uses large-scale machine learning algorithms.

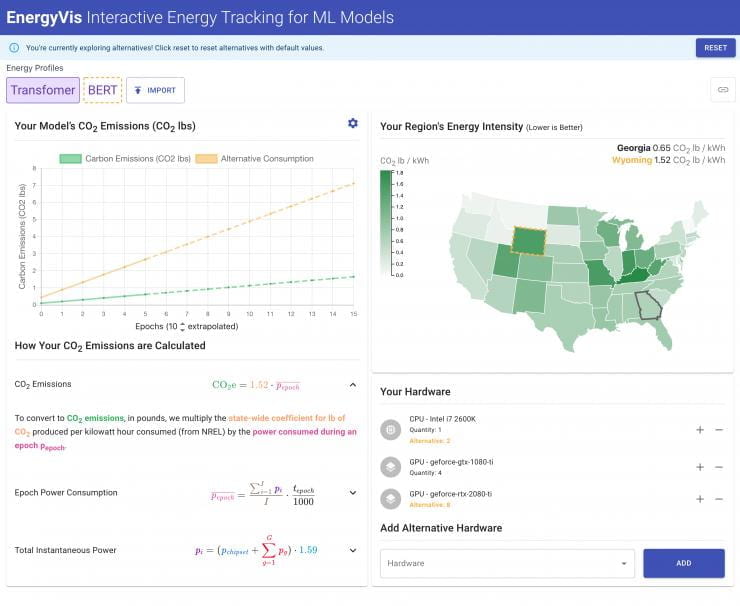

Now a team of researchers from Georgia Tech has created an interactive tool called EnergyVis that allows users to compare energy consumption across locations and against other models.
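To see why location matters, consider a rough sketch of how the same training run could carry a different footprint depending on where it runs. It assumes emissions are estimated as energy used multiplied by the carbon intensity of the local electricity grid. The region names and intensity values are hypothetical placeholders, not figures from EnergyVis, and this is not the tool's own code.

```python
# Placeholder carbon intensities in kg CO2 per kWh.
# These values are invented for illustration, not measurements.
HYPOTHETICAL_INTENSITY = {
    "region_a": 0.20,
    "region_b": 0.50,
    "region_c": 0.80,
}


def estimate_emissions(energy_kwh, intensity_by_region):
    """Return estimated kg CO2 per region for a fixed amount of energy."""
    return {
        region: energy_kwh * intensity
        for region, intensity in intensity_by_region.items()
    }


# Assume a training run that consumed 100 kWh (also a made-up figure).
emissions = estimate_emissions(100.0, HYPOTHETICAL_INTENSITY)

# List regions from lowest to highest estimated emissions.
for region, kg in sorted(emissions.items(), key=lambda item: item[1]):
    print(f"{region}: {kg:.1f} kg CO2")
```

Under these assumptions, the energy used is identical in every region and only the grid's carbon intensity changes the estimate.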

Paper: EnergyVis: Interactively Tracking and Exploring Energy Consumption for ML Models (Omar Shaikh, Jon Saad-Falcon, Austin P. Wright, Nilaksh Das, Scott Freitas, Omar Asensio, Duen Horng Chau)

Georgia Tech Research Shows Listening to Heartbeats ‘Boosts’ Empathy

Lab study demonstrates new auditory technique to change emotional perception and connection

Not judging someone until you’ve walked a mile in their shoes is an old saying that can remind people to have more empathy. Georgia Tech has a new twist on this old advice: Listen to their heartbeat.

Researchers hypothesized that hearing the heartbeat of another person would increase the listener’s empathic connection with them.

Perfecting Your Glittery Smokey-Eye with Mixed Media User Interface

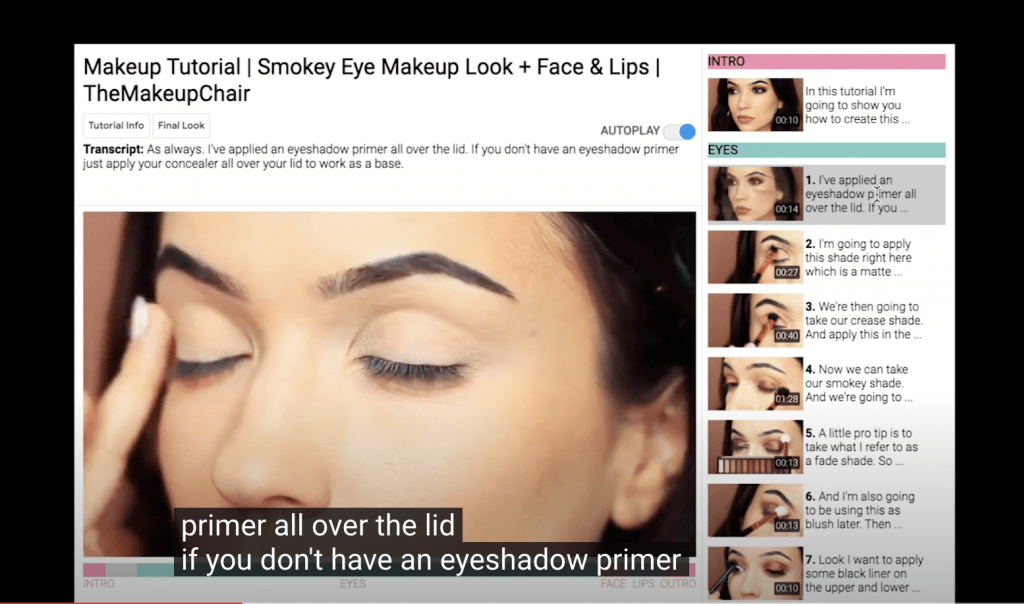

YouTube has made watching videos one of the most popular methods for learning a new skill. However, some users might find it frustrating or difficult to see what steps will be covered in a given video or where to find a specific step in the process.

Machine Learning Center Executive Director Irfan Essa and research scientists at Google Research and Stanford University teamed up to create a user-friendly and accessible interface for video watchers.

Using computer vision techniques, the interface creates a hierarchical tutorial where users can see all of the steps organized by project area and click on the area of interest.

A makeup tutorial, for example, is broken into sections by facial area (eyes, nose, lips, cheeks, etc.) and then into the steps associated with each area (such as applying a particular eyeshadow color at the crease or eyeliner above and below the waterline).

“In the case for make-up videos, we use the actions, words, objects used, and parts of the face to do this segmentation. Our goal was to show how video can be used for a detailed analysis and create an interactive experience,” said Essa.

The interface is also voice-enabled, allowing users to search the video by saying phrases like “go to step 9” or “show me where to put eyeshadow.” Users can also more easily fast-forward or replay specific sections.
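As a rough illustration, a two-level tutorial and a lookup for commands like “go to step 9” might be sketched as follows. It assumes the spoken command has already been transcribed to text. The tutorial contents, timestamps, stop-word list, and the `find_step` helper are all hypothetical, and this is not the researchers' implementation.

```python
import re

# Hypothetical two-level tutorial: facial area -> steps.
# Each step is (step number, description, start time in seconds).
# All values are invented for illustration.
TUTORIAL = {
    "eyes": [
        (1, "apply eyeshadow at the crease", 12.0),
        (2, "apply eyeliner along the waterline", 47.5),
    ],
    "lips": [
        (3, "apply lipstick", 90.0),
    ],
}

# Common words ignored when matching a command against step descriptions.
STOP_WORDS = {"at", "the", "along", "apply", "to", "me", "show", "where", "put", "go"}


def find_step(command, tutorial):
    """Return (area, step number, start time) for a command, or None."""
    text = command.lower()
    numbered = re.search(r"\bstep\s+(\d+)\b", text)
    wanted = int(numbered.group(1)) if numbered else None
    keywords = set(re.findall(r"[a-z]+", text)) - STOP_WORDS
    for area, steps in tutorial.items():
        for number, description, start in steps:
            if wanted is not None:
                # Numbered command: match on the step number only.
                if number == wanted:
                    return area, number, start
            elif keywords & set(description.split()):
                # Keyword command: match the first step sharing a keyword.
                return area, number, start
    return None


print(find_step("go to step 2", TUTORIAL))
print(find_step("show me where to put eyeshadow", TUTORIAL))
```

A command that names a step missing from the tutorial returns `None`, so the caller can decide how to respond.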

This mixed-media approach will allow users to more easily follow along with instructional videos at their own pace and more quickly master the skill set.

Paper: Automatic Generation of Two Level Hierarchical Tutorials from Instructional Makeup Videos (Anh Truong, Peggy Chi, David Salesin, Irfan Essa, Maneesh Agrawala)

Writer/Contact: Allie McFadden, Communications Officer

New Language Model Uses Texts to Predict How Groups of People Perceive AI Agents

New Georgia Tech research shows that text chats between people and an AI agent can be used to automatically predict how a group of users (e.g., a class of students) perceives the agent, specifically how human-like, intelligent, and likeable it seems.

The research team analyzed linguistic cues (e.g., the diversity and readability of the messages) in text chats that users sent to Jill Watson, the AI agent used in several Georgia Tech online computer science graduate courses. Using these cues, the researchers built a model that predicts the community’s perception of Jill.
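For a sense of what such cues can look like, here is a minimal sketch that computes a lexical diversity score (unique words divided by total words) and two crude readability proxies for one message. The specific measures, the `linguistic_cues` helper, and the sample message are assumptions made for illustration and are not necessarily the features used in the paper.

```python
import re


def linguistic_cues(message):
    """Compute simple diversity and readability proxies for one message."""
    words = re.findall(r"[A-Za-z']+", message.lower())
    sentences = [s for s in re.split(r"[.!?]+", message) if s.strip()]
    if not words or not sentences:
        return {"diversity": 0.0, "words_per_sentence": 0.0, "avg_word_length": 0.0}
    return {
        # Type-token ratio: share of words that are distinct.
        "diversity": len(set(words)) / len(words),
        # Longer sentences and longer words are rough signs of harder text.
        "words_per_sentence": len(words) / len(sentences),
        "avg_word_length": sum(len(w) for w in words) / len(words),
    }


# Hypothetical student message, invented for this example.
cues = linguistic_cues("When is the assignment due? Is the assignment graded?")
print(cues)
```

Features like these, aggregated over many messages, could then serve as inputs to a predictive model.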

Paper: Towards Mutual Theory of Mind in Human-AI Interaction: How Language Reflects What Students Perceive About a Virtual Teaching Assistant (Qiaosi Wang, Koustuv Saha, Eric Gregori, David Joyner, Ashok Goel)